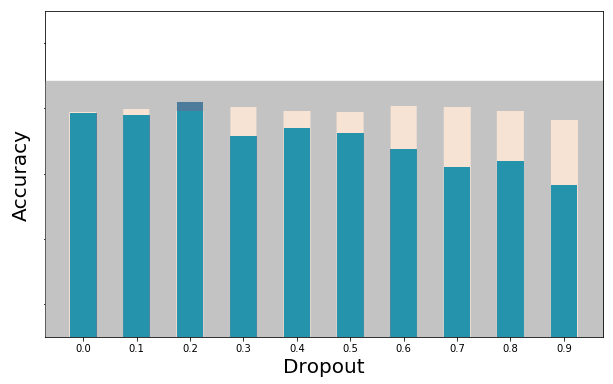

As I continue my adventures in machine learning through the FastAI courses, I wanted to explore the concept of dropout rate. If you would like to see the Jupyter Notebook used for these tests, including full annotations about what/why, check out my machine learning GitHub project, specifically the Testing Dropout Rates (small images).ipynb notebook.

Really quickly, dropout is a technique used in neural networks, including Convolutional Neural Networks (CNNs), that randomly removes neurons during training (e.g. in the first layer of an image model this would be individual pixels) to prevent overfitting (i.e. doing notably better on the training set than on the validation set) and thus increase the general applicability of the model. The dropout rate is the fraction of neurons removed on each pass. In other words, block a percentage of the material to force the model not to become too dependent on repeating patterns that lead it astray.
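To make that concrete, here is a minimal sketch of what a dropout layer does to a list of activations. It is not the code from the notebook (FastAI and PyTorch handle this internally); the function name `dropout` and its parameters are made up for illustration. It assumes the common "inverted dropout" convention, where the surviving values are scaled up by 1 / (1 - rate) so the expected total stays the same.

```python
import random


def dropout(activations, rate, training=True, rng=None):
    """Illustrative inverted dropout over a flat list of floats.

    Each value is zeroed with probability `rate`; survivors are scaled by
    1 / (1 - rate). Outside of training the input passes through unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - rate
    # Keep each activation with probability `keep`, otherwise drop it to 0.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


if __name__ == "__main__":
    values = [1.0] * 10
    print(dropout(values, rate=0.5, rng=random.Random(0)))
    print(dropout(values, rate=0.5, training=False))
```

With a rate of 0.5, roughly half of the values come back as 0.0 and the rest as 2.0, and at inference time (`training=False`) nothing is dropped.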

These tests were set up to isolate dropout rate as much as possible. Also, these tests used ResNet50, so results may differ with a different model. Okay, enough jibber-jabber, let’s jump right to the conclusions, shall we?
